AI Transparency Issues and Explainable AI: A Complete Guide

Introduction: Understanding AI Transparency Issues in the Modern World

Artificial intelligence is transforming industries at an unprecedented pace. However, as AI systems become more powerful, AI transparency issues are becoming increasingly critical. Simply put, AI transparency refers to how clearly people can understand how AI systems work, how decisions are made, and why outcomes occur.

Businesses, governments, and users now rely on AI for decision-making in healthcare, finance, hiring, education, and security. When those systems operate as “black boxes,” trust erodes quickly, so transparency is no longer optional; it is essential.

In this detailed guide, we will explore AI transparency issues, their causes, real-world examples, ethical risks, regulatory challenges, and best practices. Additionally, we will explain how transparent AI supports trust, fairness, accountability, and compliance, aligning fully with E-E-A-T principles.

What Is AI Transparency?

AI transparency means that AI systems are understandable, explainable, and open to scrutiny. In other words, stakeholders should be able to know:

- How data is collected and used

- How algorithms process information

- Why specific outputs or predictions are generated

- Who is responsible for AI decisions

Furthermore, transparency ensures that AI decisions can be audited, challenged, and improved. As a result, it becomes easier to identify errors, bias, or misuse.

Why AI Transparency Issues Are Increasing

AI transparency issues are growing rapidly for several reasons.

1. Complexity of AI Models

Modern AI models, especially deep learning systems, are highly complex. Therefore, even developers sometimes struggle to explain their outputs. Consequently, transparency suffers.

2. Proprietary Algorithms

Many companies treat AI models as trade secrets. However, this lack of openness creates ethical and legal concerns, especially in high-impact applications.

3. Massive Data Usage

AI systems rely on vast datasets. Unfortunately, data sources are often unclear. As a result, users don’t know where data comes from or how it is processed.

4. Rapid Deployment

Because organizations rush to deploy AI for competitive advantage, transparency measures are frequently overlooked. Therefore, accountability gaps emerge.

Key AI Transparency Issues Explained

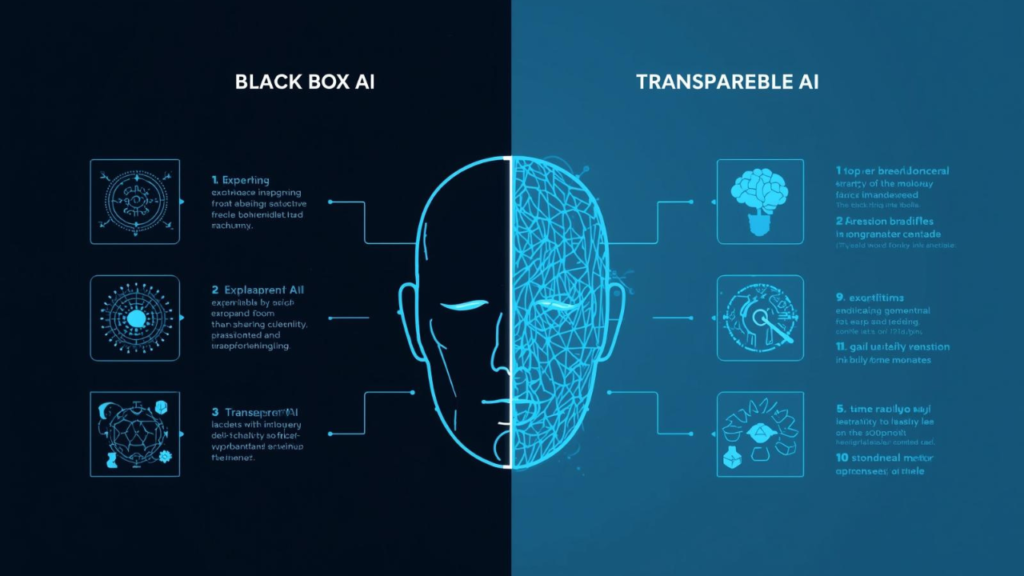

1. Black Box AI Systems

One of the most serious AI transparency issues is the black box problem. These systems produce outputs without clear explanations.

As a result:

- Users cannot verify decisions

- Bias remains hidden

- Errors are difficult to correct

For example, if an AI denies a loan application, users deserve to know why.
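
One way to make a denial explainable is to use an additive scoring model and report the factors that pulled the score down the most. The sketch below is illustrative only: the feature names, weights, baseline applicant, and approval threshold are all hypothetical and are not taken from any real lender or credit model.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for an additive credit score (illustration only).
WEIGHTS: Dict[str, float] = {
    "income_k": 0.5,             # annual income, in thousands
    "debt_to_income_pct": -0.4,  # debt-to-income ratio, in percent
    "late_payments": -6.0,       # late payments in the last two years
    "years_employed": 2.0,
}

# Hypothetical reference applicant that contributions are measured against.
BASELINE: Dict[str, float] = {
    "income_k": 60.0,
    "debt_to_income_pct": 30.0,
    "late_payments": 0.0,
    "years_employed": 5.0,
}

THRESHOLD = 25.0  # hypothetical approval cut-off


def score(applicant: Dict[str, float]) -> float:
    """Weighted sum of the applicant's features."""
    return sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def reason_codes(applicant: Dict[str, float], top_n: int = 2) -> List[Tuple[str, float]]:
    """Features that lowered the score most, relative to the baseline applicant."""
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name]) for name in WEIGHTS
    }
    negative = [(name, value) for name, value in contributions.items() if value < 0]
    negative.sort(key=lambda item: item[1])  # most negative first
    return negative[:top_n]


def decide(applicant: Dict[str, float]) -> dict:
    """Return the decision together with the reasons behind a denial."""
    total = score(applicant)
    approved = total >= THRESHOLD
    return {
        "score": total,
        "approved": approved,
        "reasons": [] if approved else reason_codes(applicant),
    }


applicant = {
    "income_k": 45.0,
    "debt_to_income_pct": 55.0,
    "late_payments": 3.0,
    "years_employed": 1.0,
}
result = decide(applicant)
for name, impact in result["reasons"]:
    print(f"{name}: lowered the score by {abs(impact):.1f} points")
```

Because the model is additive, each reason maps directly to one input, which is what makes the explanation verifiable by the person affected.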

2. Lack of Explainability

Explainable AI (XAI) aims to clarify AI decisions. However, many systems still lack clear explanations.

Consequently:

- Trust decreases

- Regulatory compliance becomes harder

- Ethical risks increase

Therefore, explainability is a core pillar of AI transparency.

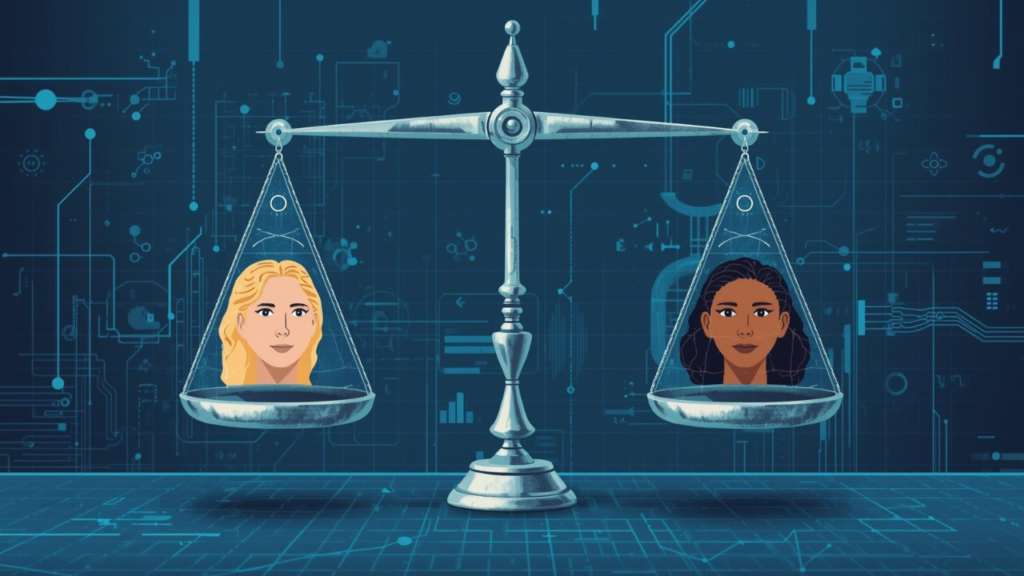

3. Hidden Bias in AI Models

Bias often originates from training data. However, without transparency, biased outcomes remain unnoticed.

For instance:

- Hiring algorithms may discriminate

- Facial recognition systems may misidentify minorities

- Credit scoring AI may unfairly penalize groups

Thus, transparency helps detect and correct bias early.
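
A simple first check for hidden bias is to compare selection rates across groups. The sketch below computes each group's selection rate and the ratio of the lowest rate to the highest (often called the disparate impact ratio). The records and group labels are made up, and the 0.8 cut-off is the widely cited "four-fifths" rule of thumb, used here as an assumption rather than a legal test.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive outcomes per group."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Made-up screening outcomes: (group label, was the candidate shortlisted?)
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # assumed rule-of-thumb threshold

print(rates, round(ratio, 2), "review needed" if needs_review else "ok")
```

A low ratio does not prove discrimination on its own, but it tells auditors where to look first.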

The same applies to the data behind a model: when data practices are undisclosed, users cannot assess whether AI decisions are fair or lawful. Therefore, data transparency is essential.

4. Accountability Gaps

When AI makes mistakes, responsibility becomes unclear. Is it the developer, the company, or the algorithm itself?

Without transparency:

- Legal accountability is weak

- Ethical responsibility is blurred

Thus, clear documentation and governance are crucial.
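
One concrete form of documentation is a decision log that records, for every automated decision, which model version produced it, what inputs it saw, and who is accountable for it. The sketch below is a minimal illustration; the field names, example values, and the hash-chaining scheme are assumptions, not an established standard.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


@dataclass(frozen=True)
class DecisionRecord:
    decision_id: str
    model_version: str
    accountable_owner: str  # named team or role answerable for the outcome
    inputs: dict            # must be JSON-serializable
    output: str
    timestamp: str          # ISO 8601 string supplied by the caller
    prev_hash: str          # digest of the previous record in the log

    def digest(self) -> str:
        """SHA-256 over a canonical JSON form of the record."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


class DecisionLog:
    """Append-only log in which each record commits to the one before it."""

    def __init__(self) -> None:
        self.records: List[DecisionRecord] = []

    def append(self, *, decision_id: str, model_version: str, accountable_owner: str,
               inputs: dict, output: str, timestamp: str) -> DecisionRecord:
        prev_hash = self.records[-1].digest() if self.records else GENESIS_HASH
        record = DecisionRecord(decision_id, model_version, accountable_owner,
                                inputs, output, timestamp, prev_hash)
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Check that every record still points at the digest of its predecessor."""
        expected = GENESIS_HASH
        for record in self.records:
            if record.prev_hash != expected:
                return False
            expected = record.digest()
        return True


log = DecisionLog()
log.append(decision_id="d-001", model_version="credit-v1", accountable_owner="risk-team",
           inputs={"late_payments": 3}, output="denied", timestamp="2026-01-15T10:00:00Z")
log.append(decision_id="d-002", model_version="credit-v1", accountable_owner="risk-team",
           inputs={"late_payments": 0}, output="approved", timestamp="2026-01-15T10:05:00Z")
print(log.verify())
```

Note that chaining only exposes edits to records that have a successor; the newest record is protected once another entry is appended or its digest is stored somewhere else.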

Why AI Transparency Is Critical for Trust

Trust is the foundation of AI adoption. Without transparency, users hesitate to rely on AI systems.

Transparency Builds Confidence

When users understand how AI works, they feel more comfortable using it. Consequently, adoption increases.

Transparency Supports Ethical AI

Ethical AI depends on openness, fairness, and responsibility. Therefore, transparency directly supports ethical frameworks.

Transparency Enhances User Control

When users understand AI decisions, they can challenge, correct, or opt out. As a result, user empowerment improves.

AI Transparency and E-E-A-T Principles

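AI transparency maps well onto E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness), the quality criteria described in Google’s Search Quality Rater Guidelines.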

Experience

Transparent AI systems reflect real-world testing and feedback. Therefore, they improve over time.

Expertise

Clear documentation demonstrates technical expertise. Moreover, it shows deep understanding of AI systems.

Authoritativeness

Organizations that prioritize transparency gain industry credibility. Consequently, they are seen as trustworthy leaders.

Trustworthiness

Ultimately, transparency builds trust. Without it, AI systems lose legitimacy.

Real-World Examples of AI Transparency Issues

Healthcare AI

AI systems diagnose diseases and recommend treatments. However, without transparency:

- Doctors cannot validate results

- Patients lose confidence

Therefore, explainable medical AI is essential.

Financial Services

Banks use AI for credit scoring and fraud detection. However, opaque systems can lead to unfair rejections.

Thus, regulations increasingly demand transparent financial AI.

Recruitment and HR

AI hiring tools screen candidates. Yet, hidden bias can exclude qualified applicants.

Transparency ensures fairness and diversity in hiring.

Law Enforcement

Predictive policing AI raises serious concerns. Without transparency, it may reinforce systemic bias.

Therefore, public oversight is critical.

Regulatory and Legal Challenges Around AI Transparency

Governments worldwide are responding to AI transparency issues.

GDPR (Europe)

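The GDPR restricts decisions based solely on automated processing (Article 22) and requires organizations to provide “meaningful information about the logic involved.” This is often described as a “right to explanation,” although the regulation’s binding articles do not use that phrase and its exact scope is debated. In practice, users can ask how automated decisions affect them.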

EU AI Act

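The EU AI Act mandates transparency for high-risk AI systems, including documentation that lets those deploying a system interpret its output. Its obligations are being phased in, so transparency is moving from good practice to legal requirement.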

Global Regulations

Countries such as the US, UK, and Canada are also developing AI transparency rules and guidance. As a result, global standards are beginning to emerge.

Explainable AI (XAI): A Solution to Transparency Issues

Explainable AI focuses on making AI decisions understandable.

Key XAI Techniques

- Feature importance analysis

- Model simplification

- Visualization tools

- Human-readable explanations

Therefore, XAI bridges the gap between performance and transparency.
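
Feature importance analysis, the first technique listed above, can be illustrated with permutation importance: shuffle one feature's values across the rows and measure how much the model's accuracy drops. The sketch below uses a stand-in "black box" function and synthetic data, both hypothetical; in practice the model would be a trained system and the data a held-out evaluation set.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def black_box(row: Row) -> int:
    """Stand-in for an opaque model: uses features 0 and 1, ignores feature 2."""
    return 1 if 2.0 * row[0] + 0.5 * row[1] > 1.2 else 0


def accuracy(model: Callable[[Row], int], rows: Sequence[Row], labels: Sequence[int]) -> float:
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)


def permutation_importance(model: Callable[[Row], int], rows: Sequence[Row],
                           labels: Sequence[int], n_features: int,
                           repeats: int = 10, seed: int = 0) -> List[float]:
    """Mean drop in accuracy when each feature's column is shuffled."""
    rng = random.Random(seed)
    base = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild the rows with only column j replaced by its shuffled values.
            shuffled = [row[:j] + [value] + row[j + 1:] for row, value in zip(rows, column)]
            drops.append(base - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances


# Synthetic evaluation data; labels come from the model itself, so baseline accuracy is 1.0.
data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random(), data_rng.random()] for _ in range(500)]
labels = [black_box(row) for row in rows]

scores = permutation_importance(black_box, rows, labels, n_features=3)
for index, value in enumerate(scores):
    print(f"feature {index}: accuracy drop {value:.3f}")
```

The ignored feature shows no accuracy drop when shuffled, while the heavily weighted feature shows the largest one, which is the kind of evidence a reviewer can use without opening the model itself.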

Best Practices to Improve AI Transparency

1. Use Clear Documentation

Document data sources, algorithms, and decision logic.
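
Documentation is easier to keep current when it lives in a structured, machine-readable record next to the model. The sketch below shows one possible shape for such a record, loosely in the spirit of a "model card"; every field name and example value is a hypothetical placeholder rather than a required schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class DataSource:
    name: str
    collected_how: str
    contains_personal_data: bool


@dataclass
class ModelDocumentation:
    model_name: str
    version: str
    intended_use: str
    algorithm: str
    decision_logic: str
    data_sources: List[DataSource] = field(default_factory=list)
    known_limitations: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Names of sections that are still empty, for use as a release check."""
        missing = []
        for name in ("intended_use", "algorithm", "decision_logic"):
            if not getattr(self, name).strip():
                missing.append(name)
        if not self.data_sources:
            missing.append("data_sources")
        if not self.known_limitations:
            missing.append("known_limitations")
        return missing

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


doc = ModelDocumentation(
    model_name="loan-screening",  # hypothetical model
    version="1.0",
    intended_use="First-pass screening; final decisions are reviewed by a person.",
    algorithm="Additive scorecard",
    decision_logic="Weighted sum of four features compared against a fixed threshold.",
    data_sources=[DataSource("applications-2024", "submitted by applicants", True)],
    known_limitations=["Not validated for applicants with no credit history."],
)
print(doc.missing_sections())
print(doc.to_json())
```

A check such as `missing_sections()` can run in a release pipeline so that a model cannot ship with undocumented data sources or limitations.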

2. Implement Explainable Models

Choose interpretable models where possible.
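
As a small illustration of an interpretable model, the sketch below fits a single-threshold rule (a decision stump) and prints it as a sentence a reviewer can read. The feature names and toy data are invented, and a real system would usually need something richer, such as a shallow decision tree or a scorecard.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Stump:
    feature: str
    feature_index: int
    threshold: float
    positive_if_above: bool

    def predict(self, row: Sequence[float]) -> int:
        above = row[self.feature_index] >= self.threshold
        return int(above == self.positive_if_above)

    def describe(self) -> str:
        op = ">=" if self.positive_if_above else "<"
        return f"predict 1 if {self.feature} {op} {self.threshold}, otherwise 0"


def fit_stump(rows: Sequence[Sequence[float]], labels: Sequence[int],
              feature_names: Sequence[str]) -> Stump:
    """Exhaustively try every feature, observed threshold, and direction;
    keep the first rule with the fewest training errors."""
    best = None
    best_errors = len(rows) + 1
    for j, name in enumerate(feature_names):
        for threshold in sorted({row[j] for row in rows}):
            for positive_if_above in (True, False):
                candidate = Stump(name, j, threshold, positive_if_above)
                errors = sum(candidate.predict(row) != label
                             for row, label in zip(rows, labels))
                if errors < best_errors:
                    best, best_errors = candidate, errors
    if best is None:
        raise ValueError("need at least one row and one feature")
    return best


# Invented toy data: [late_payments, years_employed] -> 1 means approved.
feature_names = ["late_payments", "years_employed"]
rows = [[0, 1], [0, 6], [1, 3], [2, 8], [3, 2], [4, 5]]
labels = [1, 1, 1, 0, 0, 0]

stump = fit_stump(rows, labels, feature_names)
print(stump.describe())
```

The entire model is the one printed sentence, so anyone affected by a decision can check it by hand; the cost is that such a simple rule may be less accurate than a complex model on realistic data.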

3. Conduct Regular Audits

Audit AI systems for bias, errors, and compliance.

4. Disclose Limitations

Be honest about what AI can and cannot do.

5. Involve Stakeholders

Include users, ethicists, and regulators in AI design.

Benefits of Transparent AI Systems

- Increased user trust

- Better regulatory compliance

- Reduced legal risks

- Improved decision quality

- Stronger brand reputation

Therefore, transparency is not a weakness—it is a competitive advantage.

Challenges in Achieving Full AI Transparency

Despite its importance, transparency is difficult.

Trade-Off Between Accuracy and Explainability

Complex models may be more accurate but less explainable.

Intellectual Property Concerns

Companies fear revealing proprietary information.

Technical Limitations

Some AI models are inherently difficult to interpret.

Nevertheless, partial transparency is better than none.

The Future of AI Transparency

Looking ahead, AI transparency will become a standard expectation, not a bonus feature.

- Regulations will tighten

- Users will demand explanations

- Transparent AI will likely earn more trust and adoption than opaque systems

Therefore, organizations that invest early will benefit most.

Frequently Asked Questions (FAQ) – AI Transparency Issues

What are AI transparency issues?

AI transparency issues refer to the lack of clarity about how AI systems make decisions, use data, and produce outcomes.

Why is AI transparency important?

AI transparency is important because it builds trust, reduces bias, ensures accountability, and supports ethical and legal compliance.

What is black box AI?

Black box AI describes systems where inputs and outputs are visible, but internal decision processes are hidden or unclear.

How does AI transparency reduce bias?

Transparency allows developers to examine data and algorithms, making it easier to detect and correct biased patterns.

Is AI transparency required by law?

In many regions, yes. Laws like GDPR and the EU AI Act require transparency, especially for high-risk AI systems.

What is Explainable AI (XAI)?

Explainable AI refers to techniques that make AI decisions understandable to humans.

Can AI be fully transparent?

While full transparency is difficult, meaningful and practical transparency is achievable and highly beneficial.

Who is responsible for AI transparency?

Responsibility lies with developers, organizations, policymakers, and regulators collectively.

Does AI transparency affect performance?

Sometimes, but the trade-off is often worth it because transparency improves trust and usability.

How can businesses improve AI transparency?

By using explainable models, documenting processes, auditing systems, and communicating clearly with users.

Conclusion: Why Solving AI Transparency Issues Is Essential

In conclusion, AI transparency issues represent one of the biggest challenges in modern artificial intelligence. However, they also present an opportunity. By embracing transparency, organizations can build trust, improve ethics, and ensure long-term success.

Moreover, transparent AI aligns with E-E-A-T principles, strengthens SEO and AEO performance, and meets growing regulatory demands. Therefore, transparency is not just a technical requirement—it is a strategic imperative.

If you want AI to be trusted, adopted, and respected, transparency must come first.
